FIFAI II: A Collaborative Approach to AI Threats, Opportunities, and Best Practices, Workshop 3 - AI and Financial Stability

AI is a transformative force – both awe-inspiring and potentially perilous. Its true impact will hinge on disciplined, responsible innovation and robust collaboration across borders and sectors.

Preface

On October 29, 2025, the Office of the Superintendent of Financial Institutions (OSFI), the Department of Finance Canada (Finance Canada), the Bank of Canada (BOC), and the Global Risk Institute (GRI) co-hosted a workshop on the impact of AI on financial stability. This third workshop in the Financial Industry Forum on Artificial Intelligence II (FIFAI II) series brought together over 50 Canadian and international experts from the financial sector, federal and provincial supervisors and regulators, academics, and business.

This workshop’s purpose was to:

- Analyze how AI adoption amplifies financial stability challenges and identify appropriate risk mitigation measures alongside potential uses of AI to reinforce financial system robustness.

For purposes of this workshop, “financial stability” was defined (Footnote 1) as:

- A stable and resilient financial system that continues to provide critical services to support the economy in normal times and during periods of stress. As such, a stable financial system is capable of absorbing shocks rather than amplifying them, reducing the need for official intervention.

The sponsors sought participant perspectives on i) the amplification effects of AI on financial stability risks, ii) potential mitigation options, iii) stability-enhancing uses of AI, and iv) priorities that should be identified as “next steps”.

FIFAI I originated in 2022, driven by the urgent need for collaboration and development of best practices in the responsible adoption of Artificial Intelligence (AI) in the financial industry. Four workshops were held, resulting in a report titled “A Canadian Perspective on Responsible AI.”

In early 2025, a second phase of the forum, FIFAI II: A Collaborative Approach to AI Threats, Opportunities, and Best Practices, was planned. The first workshop, held in May 2025, identified opportunities, risks, and threats related to security and cybersecurity. The second workshop, held in October, focused on combatting AI-enhanced financial crime.

A fourth workshop on consumer protection risks related to AI was held on November 13th, with a report to follow. A final report on the insights and conclusions from FIFAI II will also be released in March 2026.

AI adoption presents both opportunities and risks for the financial sector. The sponsors sincerely thank all workshop participants and speakers for their engagement in this collaborative dialogue.

Global Risk Institute

Office of the Superintendent of Financial Institutions

Department of Finance Canada

Bank of Canada

AI adoption and financial stability

Most workshop participants agreed that, without appropriate mitigation, AI could heighten financial stability risks.

They identified three avenues through which AI risks could materialize. The first stems from the internal adoption of AI by regulated financial institutions. The second emerges when other actors deploy AI technology that affects the regulated financial system. The third concerns the systemic vulnerability of shared infrastructure that financial institutions depend upon.

Participants emphasized that broader AI adoption will change how financial institutions operate and markets function, with implications for the management of institutional and systemic risks. Mitigating AI’s potential to amplify the risks to financial stability will require a dedicated focus on robust governance, strong process controls, a deep risk-awareness culture, and an understanding of system-level dynamics.

Participants identified key areas that industry, government, and regulators should address to minimize potential adverse impacts of AI on Canada’s financial stability.

Five areas where AI could amplify systemic risk

1. AI-related challenges to systemic operational resilience

The ability to detect, respond to, recover from, and adapt to operational shocks is essential for any financial institution. The rapid growth of AI-dependent services and applications is raising the stakes not just for individual institutions but for the system as a whole.

According to workshop participants, the growing overall dependence on critical third parties for AI services, along with downstream “nth party” dependencies in the AI supply chain, amplifies financial stability risk. The same can be said for critical infrastructure providers like payment platforms, which may also be using AI tools, potentially opening the door to vulnerabilities that could affect the system.

Third-party concentration of AI service providers is a significant threat to operational resilience. Canada’s financial sector relies on a concentrated set of AI suppliers, including large language model (LLM) developers, cloud services, and hardware supply chains. Concentration risks are compounded when LLMs are trained on similar datasets or ingest similar data once deployed, increasing the likelihood of correlated failures and system-wide amplification. This reliance on a small number of global AI and cloud service providers increases the risk of a single point of failure, which could become systemic.

Furthermore, most of these service providers operate outside the scope of regulation (Footnote 2), which results in limited visibility into the likelihood of a failure and its potential impact across the system. This also creates a challenge for institutions that need to assume responsibility for risk management and regulatory compliance for the services they contract externally.

Given the limited number of suppliers and vendor lock-in constraints, resolving this risk is not as simple as imposing AI provider diversification requirements. Participants generally agreed that supplier choice should be market-driven and aligned with a firm’s strategic objectives. Together, these constraints create a challenging starting point for mitigating the risks.
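The concentration concern discussed above can be made concrete with a standard concentration measure, the Herfindahl-Hirschman Index (HHI), which sums the squared percentage shares of each provider. The sketch below is illustrative only: the provider names and usage figures are hypothetical, and the workshop did not prescribe this or any other metric.

```python
def hhi(usage_by_provider):
    """Herfindahl-Hirschman Index on a 0-10,000 scale.

    usage_by_provider maps a provider name to any non-negative usage
    measure (spend, workloads, API calls). Values are normalized to
    percentage shares before squaring.
    """
    total = sum(usage_by_provider.values())
    if total <= 0:
        raise ValueError("total usage must be positive")
    return sum((100.0 * v / total) ** 2 for v in usage_by_provider.values())


# Hypothetical sector-wide usage of three AI service providers.
sector_usage = {"provider_a": 50, "provider_b": 30, "provider_c": 20}
print(hhi(sector_usage))  # 50^2 + 30^2 + 20^2
```

A value near 10,000 indicates reliance on a single provider, while lower values indicate usage spread across more providers. Any threshold for "too concentrated" would be a policy choice and is not implied here.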

Recommended mitigation options

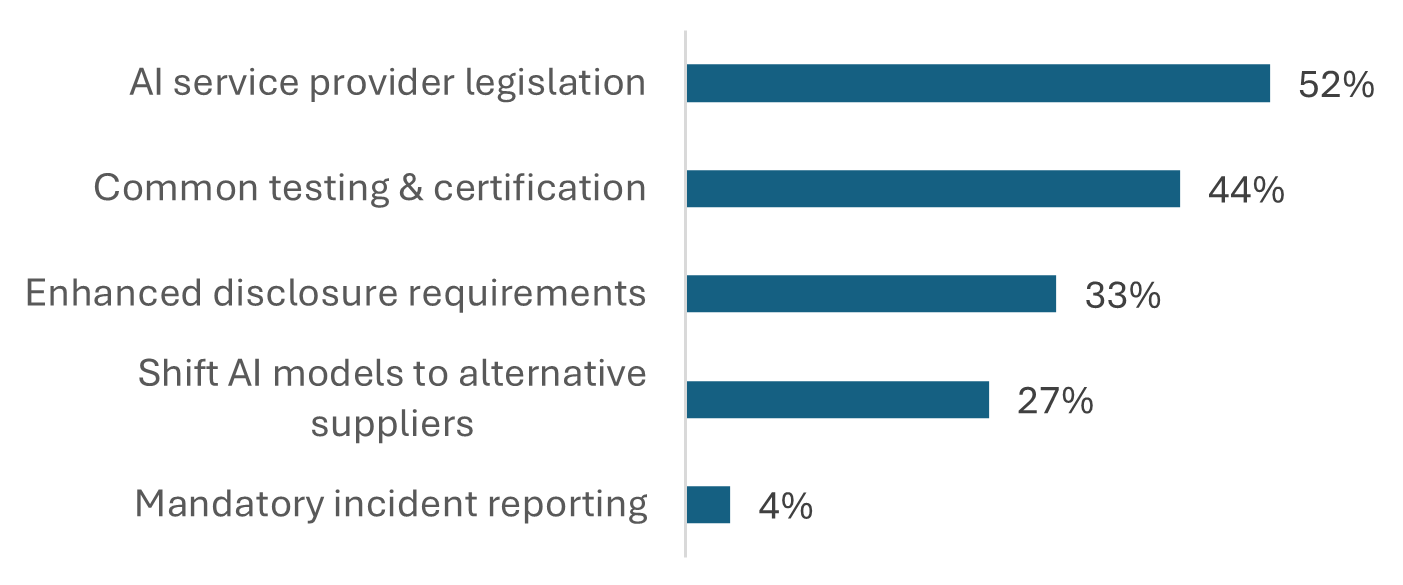

When asked, “What action would most strengthen resilience against third-party risks?”, 52% of workshop participants supported new legislation to regulate third-party AI service providers.

Percentages may add to more than 100 because participants could select two options.

Figure 1 - Text version

| Response option | Share of participants |
|---|---|
| AI service provider legislation | 52% |
| Common testing & certification | 44% |
| Enhanced disclosure requirements | 33% |
| Shift AI models to alternative suppliers | 27% |
| Mandatory incident reporting | 4% |

Participants highlighted three initiatives to mitigate the operational resilience challenges that amplify financial stability risk:

- Ensure the effectiveness of third-party related contingency plans and robust business recovery frameworks. This includes testing those plans rigorously under a range of severe but plausible scenarios.

- Ensure that operational crisis response plans incorporate sufficient collaboration among regulators with clearly defined inter-agency roles, responsibilities and decision-making authority. And,

- Establish heightened governance controls and monitoring for individual institutions and at a systemic level. This would entail determining which third-party dependencies are critical to the system and setting up mechanisms that allow for rapid coordination and communication in case of incidents with consequences to the system.
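One way to approach "determining which third-party dependencies are critical to the system" is to map direct and downstream ("nth party") dependencies and count how many institutions ultimately rely on each provider. The following is a minimal sketch under stated assumptions: all institution and provider names, the dependency data, and the 50% threshold are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical data: providers each institution contracts directly.
direct_deps = {
    "bank_a": {"llm_vendor_x", "cloud_1"},
    "bank_b": {"llm_vendor_x", "cloud_2"},
    "insurer_c": {"llm_vendor_y"},
}
# Hypothetical "nth party" links: providers that rely on other providers.
provider_deps = {
    "llm_vendor_x": {"cloud_1"},
    "llm_vendor_y": {"cloud_1"},
}


def transitive_providers(start, provider_deps):
    """Return every provider reachable from the starting set, nth parties included."""
    seen = set()
    stack = list(start)
    while stack:
        provider = stack.pop()
        if provider in seen:
            continue
        seen.add(provider)
        stack.extend(provider_deps.get(provider, ()))
    return seen


def dependency_shares(direct_deps, provider_deps):
    """Share of institutions that depend on each provider, directly or indirectly."""
    counts = defaultdict(int)
    for providers in direct_deps.values():
        for provider in transitive_providers(providers, provider_deps):
            counts[provider] += 1
    n = len(direct_deps)
    return {provider: count / n for provider, count in counts.items()}


def critical_providers(direct_deps, provider_deps, threshold=0.5):
    """Providers relied on by at least `threshold` of institutions (assumed cutoff)."""
    shares = dependency_shares(direct_deps, provider_deps)
    return sorted(p for p, share in shares.items() if share >= threshold)


print(critical_providers(direct_deps, provider_deps))
```

In this toy data, `cloud_1` is contracted directly by only one institution but is reached by all three once downstream links are followed, which is the kind of hidden dependency the participants flagged.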

2. AI-induced increases in market volatility

Heightened volatility during periods of stress may be amplified by synchronized market decisions from AI models trained on similar data. These dynamics could contribute to declining trust, market panics, and reduced liquidity when it is needed most.

AI-induced market volatility was viewed as a systemically significant risk. AI-based trading algorithms or models may intensify market volatility, particularly short-term price fluctuations. Participants believed that equity markets and exchange-traded derivatives could be particularly vulnerable to AI-driven disruption and to procyclical shifts during periods of stress. In addition, unregulated market participants, including new entrants, could leverage complex AI tools at scale and more quickly than regulated financial institutions. These entities may lack robust risk management frameworks and responsible AI practices, thereby potentially increasing risks in financial markets and risk concentration.

It is worth noting, however, that some participants viewed volatility as a fundamental part of their business models and noted that existing controls and technical thresholds reduce the likelihood of systemic herding. Canadian financial institutions have also developed proprietary AI trading models and strategies that are not reliant on third parties, which should help mitigate herding and other correlated behaviours.

Recommended mitigation options

Five initiatives were highlighted to reduce potential AI-related market volatility amplifications:

- Implement early warning systems to detect emerging changes in market dynamics, such as changes in transaction value, velocity, and volume.

- Ensure that circuit breaker mechanisms have clear thresholds to respond to extreme volatility.

- Promote the development and use of in-house-trained trading algorithms with proprietary approaches rather than exclusively relying on external models and data.

- Ensure strong risk governance processes that include controls on trading model development and safeguards against undesirable trading outcomes, with “human-in-the-loop” standards for high-risk decisions. And,

- Explore expanding regulatory boundaries to cover unregulated market participants where their use of AI could amplify systemic risks.
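The first two initiatives above (early warning on transaction metrics and circuit breakers with clear thresholds) can be sketched in a few lines. This is an illustrative sketch only: the trailing-window z-score rule, the window length, the z-score cutoff, and the 7% price-move limit are all assumptions made for the example, not values discussed at the workshop or used by any particular exchange.

```python
from statistics import mean, pstdev


def zscore_alerts(series, window=5, z_threshold=3.0):
    """Return indices whose value deviates from the trailing-window mean
    by more than z_threshold trailing standard deviations."""
    alerts = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu = mean(history)
        sigma = pstdev(history)
        if sigma == 0:
            continue  # flat history: no meaningful z-score
        if abs(series[i] - mu) / sigma > z_threshold:
            alerts.append(i)
    return alerts


def circuit_breaker_tripped(reference_price, last_price, max_move=0.07):
    """True when the price has moved more than max_move (a fraction)
    away from the reference price. max_move is an assumed threshold."""
    return abs(last_price - reference_price) / reference_price > max_move


# Hypothetical transaction volumes with one abrupt spike at index 6.
volumes = [100, 102, 98, 101, 99, 100, 400, 101]
print(zscore_alerts(volumes))
print(circuit_breaker_tripped(100.0, 92.0))
```

The same detector could be applied to transaction value or velocity series. A production system would need more robust statistics, since a single spike inflates the trailing standard deviation and can mask later anomalies.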

3. Risks from agentic AI

The risks stemming from increasingly complex, more autonomous AI models were discussed as a potential threat to financial stability. This includes the development of agentic AI by financial institutions and the impact of agentic AI systems developed by technology companies that perform financial actions. Agentic AI is distinct from other AI systems in its increasing ability to autonomously pursue goals through multi-step decision-making, tool use, and task execution.

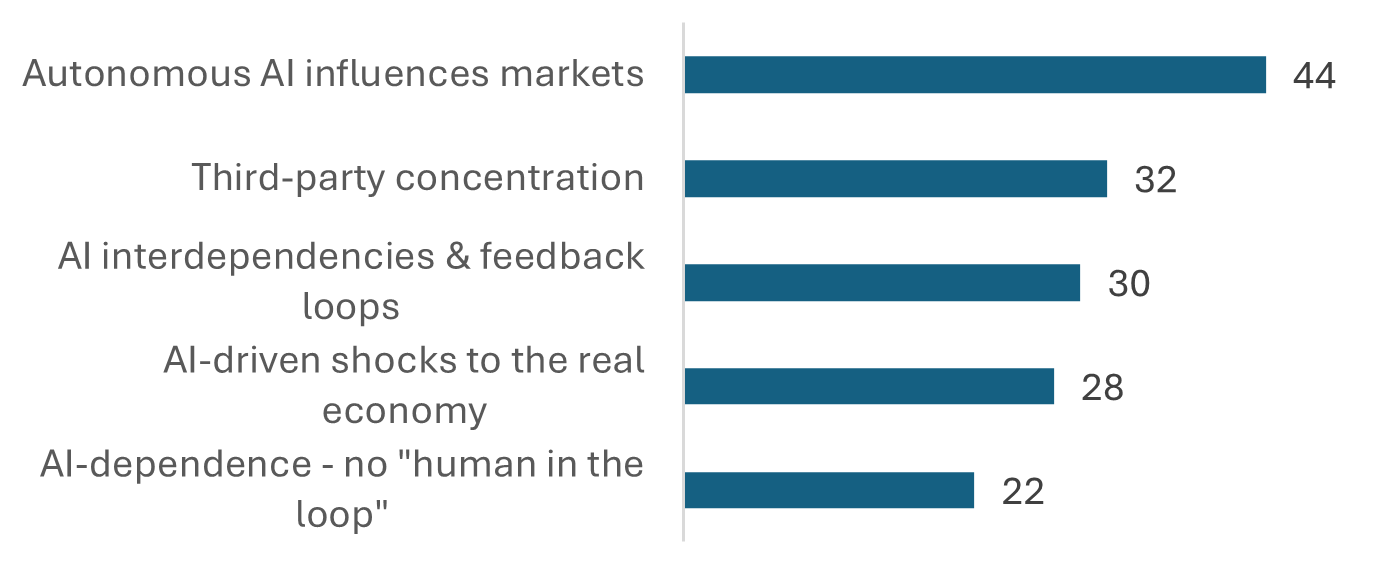

44% of participants identified autonomous AI systems as the most likely primary source of AI-related systemic risk today.

Percentages may add to more than 100 because participants could select two options.

Figure 2 - Text version

| Response option | Share of participants |
|---|---|
| Autonomous AI influences markets | 44% |
| Third-party concentration | 32% |
| AI interdependencies & feedback loops | 30% |
| AI-driven shocks to the real economy | 28% |
| AI-dependence - no "human in the loop" | 22% |

The autonomous behaviour of agentic AI systems can result in unanticipated outcomes, raising concerns ranging from decision bias to market manipulation. The rapid response capabilities and autonomy of these systems may make them challenging to monitor and oversee. The ability of agentic AI to execute material transactions without a human in the loop is seen as a potential contributor to systemic issues.

Workshop participants expressed concern that there may be insufficient safeguards to monitor and control AI agent capabilities. They compared the risks of agentic AI to those associated with a rogue trader and suggested it should trigger similar risk-management measures. A keynote presentation noted that AI systems may exploit loopholes in how their objectives are defined, thereby evading controls, limits, and safeguards. The presenter warned that reliance on current regulations related to intent is not a responsible approach to addressing this risk.

Data and data quality are fundamental to minimizing AI-deployment risk. Minimizing that risk is hampered if institutions do not sufficiently prioritize data quality when training AI models. Data, in general, can be poorly organized, hard to access, or of insufficient quality. This risk is heightened in agentic systems, where reliable, sufficiently diverse training data can be difficult to procure. When technology monitoring and oversight measures are lacking, the risk of manipulation or contamination by threat actors increases. As AI agents gain increasing access to tools and internal systems, the scale and scope of the risk are magnified.

While all generative AI models are subject to hallucination risks, errors compound in agentic systems, particularly when multiple agents are collaborating on a multi-step workflow. The training of agents further adds to the lack of transparency and explainability already present in the base AI models. Furthermore, diagnosis is challenging as replicating the decision pathways is virtually impossible.

AI agents developed by external entities, such as technology companies, could also introduce risks. For example, retail investors may benefit from access to agentic AI trading tools, as these could lower barriers to more sophisticated and active market engagement. However, individual investors relying on such tools may also inadvertently contribute to increased volatility and risk. Another example of risky agentic AI use is in corporate treasury activities. Such AI agents may reduce the stability of corporate deposits on financial intermediaries’ balance sheets if they respond more actively to news, social media rumours, or posted market rates.

Recommended mitigation options

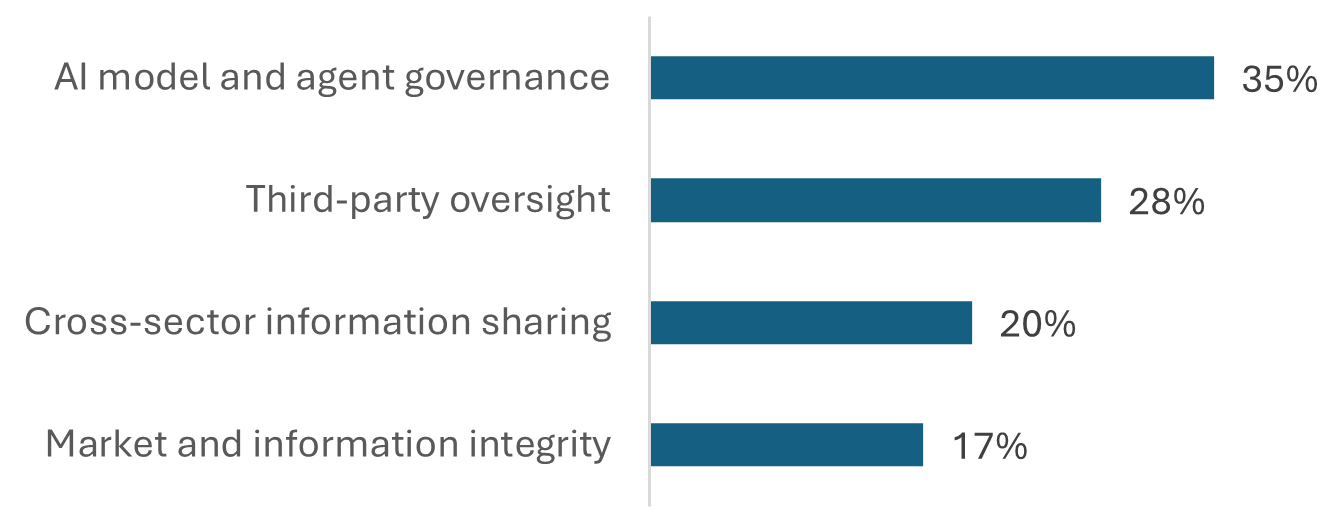

When asked about their preferred policy coordination and oversight mitigants, 35% of participants selected AI model and agent governance.

Percentages may add to more than 100 because participants could select two options.

Figure 3 - Text version

| Response option | Share of participants |
|---|---|
| AI model and agent governance | 35% |
| Third-party oversight | 28% |
| Cross-sector information sharing | 20% |
| Market and information integrity | 17% |

The following strategies were identified to mitigate financial stability risks from agentic AI:

- Adopt continuous, AI-powered monitoring and controls for agentic AI to reduce spheres of action and avoid overreliance. For example, ensure agents have distinct digital identities and ensure that when one agent oversees another, the underlying models are different.

- Encourage AI literacy and role-specific training before agentic AI systems are deployed.

- Establish clear control guidelines about what decisions require human approval (human-in-the-loop), and any functions where autonomous AI agents should not be deployed. And,

- Address transparency gaps and enhance accountability; blockchain could potentially be one means of achieving this.
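Two of the controls listed above lend themselves to a short illustration: routing agent actions to human approval, and checking that an overseeing agent runs on a different underlying model than the agent it supervises. The sketch below is hypothetical throughout. The function names, the prohibited-function list, and the approval threshold are invented for the example and do not reflect any guideline proposed at the workshop.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentAction:
    agent_id: str   # distinct digital identity of the acting agent
    function: str   # business function the action belongs to
    amount: float   # notional value of the action


# Hypothetical policy values, chosen only for illustration.
PROHIBITED_FUNCTIONS = {"credit_adjudication"}
APPROVAL_THRESHOLD = 1_000_000.0


def route_action(action):
    """Decide how an agent-initiated action is handled under the assumed policy."""
    if action.function in PROHIBITED_FUNCTIONS:
        return "blocked"  # functions where autonomous agents are not deployed
    if action.amount >= APPROVAL_THRESHOLD:
        return "needs_human_approval"  # human-in-the-loop for material actions
    return "auto_execute"


def valid_oversight_pair(worker_model, overseer_model):
    """An overseer should not share the underlying model of the agent it oversees."""
    return worker_model != overseer_model


print(route_action(AgentAction("agent-001", "fx_trade", 5_000_000.0)))
print(valid_oversight_pair("model_a", "model_a"))
```

Keeping the policy in plain data (a set and a threshold) rather than inside agent prompts makes it auditable and lets it be enforced outside the agent itself.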

4. AI-related disruptions to labour markets and business sectors

Participants agreed that economy-wide AI adoption may cause significant disruption to the labour market and to businesses in sectors significantly impacted by AI. Economic disruptions from AI could increase credit risk for financial institutions via their lending exposures to adversely affected individuals and businesses. The scale, timing and duration of such economic disruptions will dictate the extent of the problem.

Some participants viewed labour disruptions and the associated increase in credit risk as the primary driver of potential AI-related systemically significant shocks. An over-reliance on AI tools could exacerbate any skills mismatch in the labour market, leading to loss of employee skills and talent shortages in emerging roles. In a scenario where job losses accumulate, financial institutions may need to recalibrate their credit risk assessments and the risk factors they use.

Participants suggested that the consequences could lead to a “K-shaped” economy, where some sectors see companies and individuals flourish while others face shrinking opportunities. It was noted that this dynamic is generally unfavourable to lenders, as there is limited upside from exposure to individuals and businesses that are flourishing, with potentially significant downside from exposure to those who are adversely affected.

Recommended mitigation options

Identified best practices to mitigate AI-induced labour market disruption and loss of trust were:

- Improve stress-testing practices to account for scenarios where the effects of broader AI adoption can have material macroeconomic impacts.

- Focus on education and communication to increase job and upskilling opportunities.

- Enhance data sharing between industry and government to better link job loss with potential credit risk.

- Emphasize the importance of monitoring and identifying early-warning metrics and then taking effective action on emerging credit risks. And,

- Explore incentives to encourage financial institutions to redeploy rather than displace existing employees affected by AI-induced job loss.

5. Challenges in adopting AI rapidly and prudently

Many financial institutions, as well as government and regulators, face the challenge of balancing rapid AI adoption with advancing AI oversight, governance, and internal expertise. Innovation and AI adoption are essential to improving the productivity and competitiveness of Canada’s financial sector, and moving too slowly, whether due to conservative decision-making or other factors, could be just as detrimental as adopting AI too rapidly without properly managing risks.

Participants stressed that innovation and AI adoption were mission-critical for the future success of Canada’s financial sector. Workshop discussions frequently expressed the view that “the biggest risk is not doing enough”, while emphasizing the importance of balancing the need to innovate at a fast pace with the need to do so responsibly.

Institutions require sufficient capital, AI expertise, infrastructure and robust governance to implement AI effectively and responsibly. Participants emphasized that a lack of organizational commitment to actively and responsibly adopt AI could pose significant operational and strategic risks with possible implications for financial stability. A primary reason for AI adoption by financial institutions is to combat growing external AI-enabled risks from cybersecurity, fraud and other areas (see the reports on Workshop 1 - Security and Cybersecurity and Workshop 2 - Financial Crime). In addition, some participants expressed the view that a failure to adopt AI in their business could represent a material strategic risk if competitors were to gain a significant advantage by better leveraging the technology.

Regulators and government also need to adopt AI at the right pace to ensure effective oversight and financial stability in Canada. Financial regulators are striving to balance supporting responsible technological innovation at financial institutions with ensuring that such efforts comply with the principles of existing regulatory guidelines.

Some financial sector participants expressed concerns about their ability to adopt AI systems rapidly. First, there is a shortage of skilled AI talent, worsened by challenges related to AI literacy and a perceived lack of internal training and upskilling across the industry. Second, there is intense internal competition for investment and R&D budgets within institutions. Participants also questioned whether the industry has the capacity to align AI skills with the pace of adoption. They stressed the need for regulatory flexibility that incentivizes responsible adoption with appropriate controls.

Recommended mitigation options

- Focus on the fundamentals of governance and risk management that align AI deployment with suitable risk controls.

- Promote investing in and adopting AI tools and systems responsibly, especially to counter the increased threats from malicious actors using AI.

- Focus intensively on AI education, communication, and skills development within individual institutions and across the sector. And,

- Ensure that multidisciplinary teams support AI deployment to promote strategic alignment, clarify segregation of duties, and minimize fragmentation risk.

Opportunities and priorities to reduce financial stability risk

The question before you is: are your institutions bold enough to capture value for your customers and shareholders? Or are you hiding behind incrementalism – waiting for a regulator to propose rather than challenging your regulator with new innovations?

Several opportunities were highlighted to enhance resilience and financial stability:

- Broaden Canadian regulatory authority over critical third parties.

- Participants urged exploring the expansion of Canada’s regulatory regime to include critical third-party suppliers to mitigate concentration risk. The UK’s 2024 Critical Third Parties Regime, which sets service and resilience standards for critical third parties, was one recommended model. This would include increased scrutiny of critical AI and technology infrastructure, possibly designating parts of the financial sector or AI infrastructure as “systemically important” for government oversight (Footnote 3).

- Enhanced national response capability for critical financial infrastructure.

- Participants proposed a review of existing national functions for responding to a systemic incident at a critical third party or other critical financial infrastructure, given the elevated AI-related risks to the financial sector. This could potentially take the form of a national AI coordination group, a disaster recovery playbook, or a framework specific to the financial system. Part of this effort could involve determining which service providers are critical to the financial industry and monitoring incidents.

- Leveraging supervisory AI tools.

- One area where supervisors could deploy AI tools is mapping and monitoring third-party dependencies and incidents in real time, linking institutions with providers and improving visibility into, and early warning of, systemic vulnerabilities. AI could also be used to monitor market trends and identify potential volatility or liquidity incidents early. Another area could be the use of AI to monitor the growth of activity by agentic systems, especially those deployed by entities outside the regulated system. Supervisory AI systems could also help monitor AI-related impacts on labour markets and the broader economy.

- AI sandbox opportunities.

- Canadian financial institutions have primarily focused on lower-risk AI use cases around operational efficiency and process improvement. A sandbox environment may allow opportunities to test AI tools before deploying them in market-facing systems or in other higher-risk domains.

- Adopting an AI growth mindset.

- While AI is often conceptualized as a means of reducing costs and driving efficiency, it can also represent an unprecedented opportunity for growth and increased competitiveness. Financial institutions can embrace this mindset both in how they develop their strategic plans and in how they manage change transitions for their workforces. Improving existing services and offering new services is an optimal way to deploy both human and technological resources to grow revenue.

- Collaboration across the industry to strengthen financial stability.

- Participants called for increased collaboration, bringing together industry, regulators, policymakers, technology companies, and academia to potentially share AI dependencies, promote information sharing, develop taxonomies and common metrics, and establish third-party certification standards.

- AI skills and literacy development.

- There is an ongoing need to increase the development of AI skills and literacy within the financial industry, within its supervisory regime, and in Canada more broadly. Fostering education and training could help smooth labour-market disruptions. Furthermore, strengthening skill sets for risk management and other functions would lead to better monitoring and oversight.

Next Steps

Today's forum is a great step toward a better understanding of AI, its role in the financial industry, and mitigating the risks it represents to financial stability effectively. A better understanding can dispel unfounded fears and enable policymakers to focus on real problems.

Participants emphasized that AI could amplify risks to financial stability. The workshop discussions helped clarify the nature of the risks, potential mitigations, and opportunities for the sector to move forward and thrive in an era of unprecedented technological change.

The financial industry’s immediate challenge is to balance the critical need for innovation and AI adoption with effective AI governance and risk management. Setting appropriate guardrails is essential to ensure institutional and systemic stability, growth, and innovation. Furthermore, institutions and prudential authorities alike need to vigilantly monitor for signs of systemically significant effects from AI developments.

In March 2026, a final report will be released that will synthesize the forum’s views from the four workshops on the risks, threats, and opportunities that arise from AI adoption in the financial industry.